Search: "gpt3 elon musk writes destroy humans"

0 results found

FEATURED STORIES

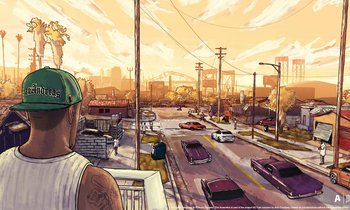

GTA 6 Won't Kill GTA Online: Take-Two Confirms Continued Support

Free Fire MAX Redeem Codes February 11, 2026: Get Free Diamonds with 100% Working Codes

Genshin Impact 6.3 Columbina Ultimate Build Guide

How Popular is Free Fire in India in 2026?